Hive

Latest revision as of 22:32, 13 January 2025

Hive is a VM on Melange that runs my TrueNAS storage.

Pools

| Pool | Capacity | Type | Drives |

|---|---|---|---|

| mine | 43 TB | raidz | 8TB ssd x7 |

| safe | 5.2 TB | raidz | 1 TB ssd x7 |

NOTE that there is an eighth 8TB drive matching the mine array in Bluto. Harvest it from there if one of the 7 dies.
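The capacities in the table can be sanity-checked with a little arithmetic. This is a rough sketch, assuming "raidz" here means single-parity raidz (raidz1) and ignoring ZFS metadata and padding overhead; the helper name `raidz_usable_bytes` is made up for illustration. It suggests the "43 TB" figure is probably being reported in binary units (TiB).

```python
TB = 10**12   # decimal terabyte, as printed on the drive label
TIB = 2**40   # binary tebibyte, which storage UIs often label "TB"


def raidz_usable_bytes(drive_count, drive_bytes, parity=1):
    """Raw data capacity of one raidz vdev, before ZFS overhead."""
    if drive_count <= parity:
        raise ValueError("need more drives than parity devices")
    # One drive's worth of space per parity level goes to redundancy.
    return (drive_count - parity) * drive_bytes


mine = raidz_usable_bytes(7, 8 * TB)
safe = raidz_usable_bytes(7, 1 * TB)

print(f"mine: {mine / TB:.1f} TB = {mine / TIB:.1f} TiB")  # mine: 48.0 TB = 43.7 TiB
print(f"safe: {safe / TB:.1f} TB = {safe / TIB:.1f} TiB")  # safe: 6.0 TB = 5.5 TiB
```

The safe pool shows 5.2 TB rather than 5.5, which is plausibly ZFS overhead or slightly smaller real drive sizes; the sketch does not model either.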

Mine Drives

Unfortunately I opted for Samsung SSD 870 QVO drives, which have been the ONLY 8TB SSD option available for a long time now.

Problems:

- They are not designed to survive lots of reads/writes.

- Because they are so big, it is very hard to maintain them, with scrubs taking hours, and resilvers BARELY able to complete after days.
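To get a feel for why drive size hurts so much, here is a back-of-the-envelope sketch of how long it takes to read or rewrite one full 8 TB drive. The throughput values are hypothetical placeholders, not measurements from Hive, and `hours_to_process` is an illustrative helper; real scrubs and resilvers also depend on how full the pool is and on what else is hitting the drives.

```python
def hours_to_process(data_bytes, throughput_mb_per_s):
    """Hours needed to read or write data_bytes at a sustained rate in MB/s."""
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = data_bytes / (throughput_mb_per_s * 10**6)
    return seconds / 3600


drive_bytes = 8 * 10**12  # one full 8 TB drive

# Hypothetical sustained rates in MB/s; substitute real observed numbers.
for rate in (500, 150, 50):
    print(f"{rate} MB/s -> {hours_to_process(drive_bytes, rate):.1f} h")
```

At an assumed 50 MB/s, a single full drive already takes about 44 hours, so a multi-day resilver is not surprising once sustained write speed drops.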

Yep, they suck. And now I am stuck with them. So I will keep getting new ones and returning the bad ones, until I can move to NVMe drives on a new PCIe card.

Hardware

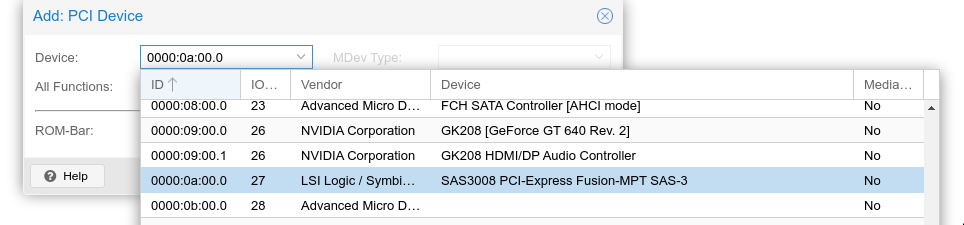

- LSI 8-drive SAS board passed through Proxmox to Hive as "PCI Device":
  - 7 x 1 TB Crucial SSDs

Plugged in to SATA 1, 2, 3 and U.2 1, 2, 3, 4. NOTE: getting the U.2 drives recognized by the Melange ASUS mobo required a BIOS change:

BIOS > advanced > onboard devices config > U.2 mode (bottom) > SATA (NOT PCI-E)