Hive

TODO

- copy bitpost/softraid/* to safe/

- remove:
  - backup/old/box_images
  - backup/development-backup-2019-01
  - development-backup-2019-01/bitpost.com/htdocs/temp/Billions/
  - development-backup-2019-01/causam/git
  - development/bitpost.com/htdocs/8675309/mame/roms (symlink?)
  - development/bitpost.com/htdocs/files/photos (symlink?)
  - development/config (etc.; probably needed, but not the .git folders, which are huge)
  - development/postgres/dumps (selectively; I don't need 1000 old databases)
  - projects/GoPro/export (check out what goodies lie there!)
  - projects/iMovie (check out what goodies lie there!)
  - software/games (mame, stella roms, etc.)

......

- set up a cron job to pull all repos to safe/ every night

- update all my code on softraid; remove both old softraid drives and give them to Dan for offsite backup

- move mack data to safe, remove torrent symlinks, update the Windows mapped drive and Kodi

- stop rtorrent, move sassy to safe, update edit_torrent_shows links, update torrent symlinks, update all SharedDownload folders (via mh-setup-samba!)

- move splat data to safe, update edit_torrent_shows links, update torrent symlinks

- remove mack, sassy and splat; safe should then have 478 GB + 200 GB + 3100 GB ≈ 3.8 TB used (ouch)

- DEEP PURGE: to move softraid, reservoir, tv-mind and film onto safe and grim, everything will need a deep purge first; check that there are no symlinks

- continue migrating until 7 drive slots are free (16 slots - 7 for safe - 2 for grim)

- add 7 new, larger SSDs as a new raidz array

- rinse and repeat
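The nightly pull in the TODO list above could be a small script run from cron. This is a minimal sketch, assuming the repos are already cloned under one directory on safe, that `git` and `python3` are available on whichever machine runs the job, and that a fast-forward-only pull is acceptable. The path `/mnt/safe/safe-ds/repos`, the script name `pull_repos.py` and the crontab line are hypothetical.

```python
#!/usr/bin/env python3
"""Pull every git repo found under a root directory (sketch for a nightly cron job).

Hypothetical crontab entry (03:00 every night):
    0 3 * * * python3 /root/pull_repos.py /mnt/safe/safe-ds/repos
"""
import os
import subprocess
import sys
from pathlib import Path


def find_repos(root: Path) -> list[Path]:
    """Return directories under root that contain a .git dir or file, without descending into them."""
    repos = []
    for dirpath, dirnames, filenames in os.walk(root):
        if ".git" in dirnames or ".git" in filenames:
            repos.append(Path(dirpath))
            dirnames[:] = []  # prune: don't walk inside a repo (skips nested checkouts)
    return sorted(repos)


def pull_all(root: Path) -> dict[Path, int]:
    """Run a fast-forward-only pull in each repo; map repo path -> git exit code."""
    results = {}
    for repo in find_repos(root):
        proc = subprocess.run(
            ["git", "-C", str(repo), "pull", "--ff-only"],
            capture_output=True,
            text=True,
        )
        results[repo] = proc.returncode
        if proc.returncode != 0:
            print(f"pull failed in {repo}: {proc.stderr.strip()}", file=sys.stderr)
    return results


if __name__ == "__main__" and len(sys.argv) > 1:
    # Exit non-zero if any pull failed; each failure is also printed to stderr.
    failures = [repo for repo, rc in pull_all(Path(sys.argv[1])).items() if rc != 0]
    sys.exit(1 if failures else 0)
```

Pruning at the first `.git` keeps the walk cheap on big trees and skips submodules and nested checkouts; `--ff-only` makes a pull fail loudly instead of creating merge commits when a local copy has diverged.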

Overview

FreeNAS provides storage via pools. A pool is a set of raw drives gathered and managed as a unit. Each of my pools is one of these:

- single drive: no FreeNAS advantage other than health checks

- raid1 pair: mirrored drives give normal write speed, fast reads and single-failure redundancy, at the cost of half the raw capacity

- raid0 pair: striped drives give fast writes and fast reads, but no redundancy (losing either drive loses the whole pool); no capacity cost

- raidz of multiple drives: ZFS balances read/write speed, redundancy and storage capacity across the set

The three levels of raidz are:

- raidz: one drive's worth of capacity goes to parity (ZFS spreads the parity across all the drives), so n drives give you (n-1) drives of storage; one drive can be lost without losing any data; fastest

- raidz2: two drives for parity, two can be lost

- raidz3: three drives for parity, three can be lost; slowest
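The capacity and redundancy rules in the two lists above reduce to a little arithmetic. A minimal sketch, assuming all drives in a pool are the same size and ignoring ZFS metadata/padding overhead and the TB vs TiB difference; the function name `pool_layout` is illustrative, and the layout labels follow this page's own raid0/raid1/raidz naming.

```python
PARITY_DRIVES = {"raidz": 1, "raidz2": 2, "raidz3": 3}


def pool_layout(kind: str, n_drives: int, drive_tb: float) -> tuple[float, int]:
    """Return (approximate usable TB, drive failures tolerated) for equal-size drives."""
    if n_drives < 1:
        raise ValueError("a pool needs at least one drive")
    if kind == "single":
        if n_drives != 1:
            raise ValueError("single uses exactly one drive")
        return drive_tb, 0
    if kind == "raid0":  # stripe: capacities add up, losing any drive loses the pool
        return n_drives * drive_tb, 0
    if kind == "raid1":  # mirror: every drive holds a full copy of the data
        if n_drives < 2:
            raise ValueError("a mirror needs at least two drives")
        return drive_tb, n_drives - 1
    if kind in PARITY_DRIVES:  # raidz levels: p drives' worth of parity
        p = PARITY_DRIVES[kind]
        if n_drives <= p:
            raise ValueError(f"{kind} needs more than {p} drives")
        return (n_drives - p) * drive_tb, p
    raise ValueError(f"unknown layout {kind!r}")


# Compare the same seven 1 TB SSDs (like safe) under each raidz level.
for level in ("raidz", "raidz2", "raidz3"):
    usable, tolerated = pool_layout(level, 7, 1.0)
    print(f"{level}: {usable:.1f} TB usable, survives {tolerated} failed drive(s)")
```

With seven 1 TB drives this prints 6.0, 5.0 and 4.0 TB usable for raidz, raidz2 and raidz3, which lines up with safe's 6 TB in the pool table; real ZFS usable space will come out somewhat lower.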

Pools

Current pools and their drives:

| Pool | Usable capacity | Type | Drives |
|---|---|---|---|
| sassy | 0.2 TB | single | 250 GB SSD |
| splat | 3.6 TB | raid0 | 1.82 TB HDD x2 |
| mack | 0.9 TB | single | 1 TB SSD |
| reservoir | 2.7 TB | single | 2.73 TB HDD |
| grim | 7.2 TB | raid0 | 3.64 TB SSD x2 |
| safe | 6 TB | raidz | 1 TB SSD x7 |
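The space and slot arithmetic from the TODO list can be checked against the safe row of the table. A small sketch, assuming the 478, 200 and 3100 figures are the GB currently used on mack, sassy and splat, and that safe's usable capacity is the 6 TB listed; `SAFE_TB`, `INCOMING_GB`, `TOTAL_SLOTS` and the helper functions are illustrative names.

```python
SAFE_TB = 6.0  # usable capacity of safe (raidz, 7 x 1 TB SSD) from the table
INCOMING_GB = {"mack": 478, "sassy": 200, "splat": 3100}  # assumed GB in use on each pool

TOTAL_SLOTS = 16  # drive slots available to hive, per the TODO list
SLOTS_IN_USE = {"safe": 7, "grim": 2}


def projected_safe_usage(incoming_gb: dict[str, int], safe_tb: float) -> tuple[float, float]:
    """Return (TB used, fraction of safe used) after moving the listed pools onto safe."""
    used_tb = sum(incoming_gb.values()) / 1000  # decimal units: 1 TB = 1000 GB
    return used_tb, used_tb / safe_tb


def free_slots(total: int, in_use: dict[str, int]) -> int:
    """Slots left once only the listed pools occupy drives."""
    return total - sum(in_use.values())


used_tb, fraction = projected_safe_usage(INCOMING_GB, SAFE_TB)
print(f"safe: {used_tb:.2f} TB of {SAFE_TB} TB used ({fraction:.0%})")
print(f"free slots once only safe and grim remain: {free_slots(TOTAL_SLOTS, SLOTS_IN_USE)}")
```

This prints 3.78 TB used (63% of safe) and 7 free slots, matching the 3.8 TB and 7-slot figures in the TODO list.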

Datasets

Every pool should have exactly one dataset. The dataset is where permissions are set, which is important for Samba access.

Dataset settings:

- Name: #pool#-ds
- Share type: SMB
- User: m
- Group: m
- ACL:
  - Who: everyone@
  - Type: Allow
  - Permissions type: Basic
  - Permissions: Full control
  - Flags type: Basic
  - Flags: Inherit

Share each dataset as a Samba share under Sharing > Windows Shares (SMB).

Use the pool name for the share name.

Use the same ACL as for the dataset.
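The dataset and share conventions above can be captured as data, which makes it easy to see what each pool should end up with. A sketch only: it assumes FreeNAS's default mount point of `/mnt/<pool>` and a dataset created directly under the pool root; `SmbSetup` and `smb_setup_for` are hypothetical names, and nothing here talks to the FreeNAS API.

```python
from dataclasses import dataclass, field

# ACL entry used for both the dataset and its SMB share (values from this page).
ACL_ENTRY = {
    "who": "everyone@",
    "type": "Allow",
    "perm_type": "Basic",
    "perm": "Full control",
    "flags_type": "Basic",
    "flags": "Inherit",
}


@dataclass(frozen=True)
class SmbSetup:
    dataset: str     # ZFS dataset name, "<pool>/<pool>-ds"
    path: str        # mount path shared over SMB (assumes the /mnt/<pool> mount point)
    share_name: str  # the share is named after the pool
    share_type: str = "SMB"
    owner: str = "m"
    group: str = "m"
    acl: dict = field(default_factory=lambda: dict(ACL_ENTRY))


def smb_setup_for(pool: str) -> SmbSetup:
    """Apply the one-dataset-per-pool naming convention to a pool name."""
    ds = f"{pool}-ds"
    return SmbSetup(dataset=f"{pool}/{ds}", path=f"/mnt/{pool}/{ds}", share_name=pool)


for pool in ["sassy", "splat", "mack", "reservoir", "grim", "safe"]:
    s = smb_setup_for(pool)
    print(f"{s.share_name:10} -> {s.path}")
```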

Hardware

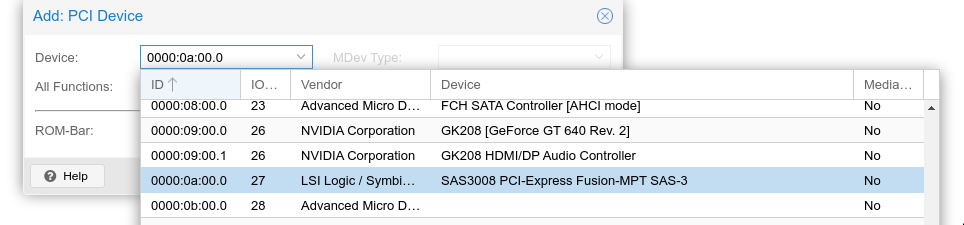

- LSI 8-drive SAS board, passed through Proxmox to hive as a "PCI Device":

- seven 1 TB Crucial SSDs

The SSDs are plugged into SATA 1, 2, 3 and U.2 1, 2, 3, 4. NOTE: getting the U.2 drives recognized by the Melange ASUS motherboard required a BIOS change:

BIOS > advanced > onboard devices config > U.2 mode (at the bottom) > SATA (NOT PCI-E)