Hive: Difference between revisions

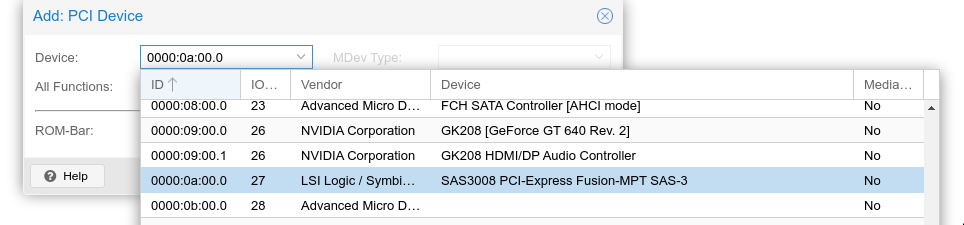

Latest revision as of 16:31, 8 October 2023

TODO

- run mh-setup-samba everywhere
  - x cast
  - x bandit
  - glam
- update kodi shares to remove old, add mine
  - x cast
  - case (needs kodi update)
- update windoze mapped drive to point to mine/mack (get it to persist, ffs)
- move tv-mind, film to mine, pull them out of bitpost, harvest (they are 4 and 6TB!!)
- SHIT i should have burn-tested the drives!!! I AM SEEING ERRORS!! And I won't be able to fix once i have them loaded up... rrr... the true burn tests are destructive... since i'm an impatient moron, i will just have to do SMART tests from TrueNAS UI...
  - da10 SHORT: SUCCESS
  - da13 SHORT: SUCCESS
  - (keep going)
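The short tests above were run from the TrueNAS UI. As a rough sketch of a shell-side equivalent, assuming `smartctl` (smartmontools) is available on the box and that the disks show up as `/dev/daNN`, something like this could queue the remaining tests. The function names are made up for illustration, and only da10 and da13 come from the notes above.

```python
import subprocess


def short_test_command(device):
    """Build the smartctl argv that starts a SHORT self-test on one disk."""
    return ["smartctl", "-t", "short", f"/dev/{device}"]


def selftest_log_command(device):
    """Build the smartctl argv that prints the self-test log for one disk."""
    return ["smartctl", "-l", "selftest", f"/dev/{device}"]


def start_short_tests(devices, dry_run=True):
    """Start (or, with dry_run, just print) a short test for each device.

    Returns the list of commands so the caller can log or inspect them.
    """
    commands = [short_test_command(d) for d in devices]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # smartctl uses a bitmask exit status, so do not raise on non-zero.
            subprocess.run(cmd, check=False)
    return commands
```

Calling `start_short_tests(["da10", "da13"])` only prints the commands; pass `dry_run=False` to actually start the tests, then read the results with the command from `selftest_log_command`. A short test is non-destructive, unlike a real burn-in.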

Pools

| Pool | Capacity | Type | Drives |
|---|---|---|---|
| grim | 7.2 TB | raid0 | 3.64 TB ssd x2 |
| mine | 43 TB | raidz | 8 TB ssd x7 |
| safe | 5.2 TB | raidz | 1 TB ssd x7 |

NOTE that there is an eighth 8TB drive matching the mine array in Bluto. Harvest it from there if one of the 7 dies.
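As a sanity check on the capacities in the table, here is a minimal sketch of the arithmetic. It assumes "raidz" means single-parity raidz1 (one drive's worth of space goes to parity) and that the capacities are reported in TiB while drives are sold in decimal TB. Both are assumptions, not something these notes confirm, and the estimate ignores ZFS metadata and padding overhead, so real numbers come out a bit lower.

```python
TIB = 2**40  # bytes in one tebibyte


def raidz1_usable_tib(drive_count, drive_size_tb):
    """Rough usable space of a raidz1 vdev, in TiB.

    drive_size_tb is the marketing size in decimal terabytes (1e12 bytes).
    One drive's worth of capacity is assumed to be consumed by parity.
    """
    if drive_count < 2:
        raise ValueError("raidz1 needs at least 2 drives")
    data_bytes = (drive_count - 1) * drive_size_tb * 1e12
    return data_bytes / TIB


# Pools from the table above: (name, drive count, drive size in TB)
for name, count, size_tb in [("mine", 7, 8.0), ("safe", 7, 1.0)]:
    print(f"{name}: about {raidz1_usable_tib(count, size_tb):.1f} TiB before overhead")
```

For mine this gives roughly 43.7 TiB, in line with the 43 TB in the table. For safe it gives roughly 5.5 TiB against the listed 5.2 TB, with the gap presumably down to overhead.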

Hardware

- LSI 8-drive SAS board passed through Proxmox to hive as "PCI Device":

- 7 x 1 TB Crucial SSDs

Plugged into SATA 1, 2, 3 and U.2 1, 2, 3, 4. NOTE: getting the U.2 drives recognized by the Melange ASUS mobo required a BIOS change:

BIOS > advanced > onboard devices config > U.2 mode (bottom) > SATA (NOT PCI-E)